GMO Statistics Part 33. Headstrong old men can hold up science for decades

This post records how science went down a long, unproductive alleyway when it tackled a concept that the human brain intuitively takes for granted, namely how to work out cause and effect in complicated everyday situations. Yes, dear reader, scientists can be wrong, indeed very wrong.

One problem with big mistakes in scientific logic is that they can take a long time to fix. They often need a revolution. In this case it was the Bayesian revolution, which happened about 1995 (although many headstrong old scientists never noticed). Perhaps the best introduction to this revolution is provided in Nate Silver’s great little book The Signal and the Noise, and in this book young Nate quite properly tells some rather harsh home truths about a few headstrong old scientists. For another good lead-in, why not try Judea Pearl’s web pages (sampled below), and then maybe his book Causality if you are really keen.

The world needs a few more young Turks.

Judea Pearl presentations

Transcript and slides of 1996 Faculty Research Lecture: The Art and Science of Cause and Effect

Transcript and slides of 1999 IJCAI Award Lecture: Reasoning with Cause and Effect

SLIDE 1: THE ART AND SCIENCE OF CAUSE AND EFFECT

Judea Pearl 1996.

Thank you Chancellor Young, colleagues, and members of the Senate Selection Committee for inviting me to deliver the eighty-first lecture in the UCLA Faculty Research Lectureship Program. It is a great honor to be deemed worthy of this podium, and to be given the opportunity to share my research with such a diverse and distinguished audience.

The topic of this lecture is causality – namely, our awareness of what causes what in the world and why it matters. Though it is basic to human thought, causality is a notion shrouded in mystery, controversy, and caution, because scientists and philosophers have had difficulties defining when one event TRULY CAUSES another. We all understand that the rooster’s crow does not cause the sun to rise, but even this simple fact cannot easily be translated into a mathematical equation.

Today, I would like to share with you a set of ideas which I have found very useful in studying phenomena of this kind. These ideas have led to practical tools that I hope you will find useful on your next encounter with a cause and effect.

And it is hard to imagine anyone here who is NOT dealing with cause and effect. Whether you are evaluating the impact of bilingual education programs or running an experiment on how mice distinguish food from danger or speculating about why Julius Caesar crossed the Rubicon or diagnosing a patient or predicting who will win the 1996 presidential election, you are dealing with a tangled web of cause-effect considerations. The story that I am about to tell is aimed at helping researchers deal with the complexities of such considerations, and to clarify their meaning.

…

…SLIDE 33: KARL PEARSON (1934)

Thus, Pearson categorically denies the need for an independent concept of causal relation beyond correlation. He held this view throughout his life and, accordingly, did not mention causation in ANY of his technical papers. His crusade against animistic concepts such as “will” and “force” was so fierce and his rejection of determinism so absolute that he EXTERMINATED causation from statistics before it had a chance to take root.

SLIDE 34: SIR RONALD FISHER

It took another 25 years and another strong-willed person, Sir Ronald Fisher, for statisticians to formulate the randomized experiment – the only scientifically proven method of testing causal relations from data, and which is, to this day, the one and only causal concept permitted in mainstream statistics.

And that is roughly where things stand today… If we count the number of doctoral theses, research papers, or textbook pages written on causation, we get the impression that Pearson still rules statistics. The “Encyclopedia of Statistical Science” devotes 12 pages to correlation but only 2 pages to causation, and spends one of those pages demonstrating that “correlation does not imply causation.”

Let us hear what modern statisticians say about causality.

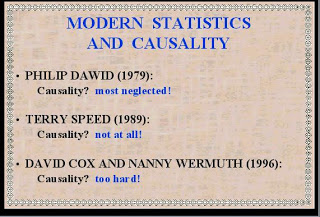

SLIDE 35: MODERN STATISTICS AND CAUSALITY

Philip Dawid, the current editor of Biometrika – the journal founded by Pearson – admits: “causal inference is one of the most important, most subtle, and most neglected of all the problems of statistics”. Terry Speed, former president of the Biometric Society (whom you might remember as an expert witness at the O.J. Simpson murder trial), declares: “considerations of causality should be treated as they have always been treated in statistics: preferably not at all, (but if necessary, then with very great care.)” Sir David Cox and Nanny Wermuth, in a book published just a few months ago, apologize as follows: “We did not in this book use the words CAUSAL or CAUSALITY…. Our reason for caution is that it is rare that firm conclusions about causality can be drawn from one study.”

This position of caution and avoidance has paralyzed many fields that look to statistics for guidance, especially economics and social science. A leading social scientist stated in 1987: “It would be very healthy if more researchers abandon thinking of and using terms such as cause and effect.”

Can this state of affairs be the work of just one person? Even a buccaneer like Pearson? I doubt it.

But how else can we explain why statistics, the field that has given the world such powerful concepts as the testing of hypotheses and the design of experiments, would give up so early on causation?

One obvious explanation is, of course, that causation is much harder to measure than correlation. Correlations can be estimated directly in a single uncontrolled study, while causal conclusions require controlled experiments.

But this is too simplistic; statisticians are not easily deterred by difficulties, and children manage to learn cause-effect relations WITHOUT running controlled experiments. The answer, I believe, lies deeper, and it has to do with the official language of statistics, namely the language of probability. This may come as a surprise to some of you, but the word “CAUSE” is not in the vocabulary of probability theory; we cannot express in the language of probabilities the sentence, “MUD DOES NOT CAUSE RAIN” – all we can say is that the two are mutually correlated, or dependent – meaning if we find one, we can expect the other. Naturally, if we lack a language to express a certain concept explicitly, we can’t expect to develop scientific activity around that concept. Scientific development requires that knowledge be transferred reliably from one study to another and, as Galileo showed 350 years ago, such transference requires the precision and computational benefits of a formal language.
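As a rough illustration of the mud-and-rain point (this is not from the lecture; it is a toy sketch with made-up numbers), the Python below simulates a world in which rain causes mud and never the reverse. The function names `simulate` and `prob`, the `force_mud` parameter, and the probabilities 0.3, 0.9 and 0.05 are all assumptions chosen for illustration. Observed passively, mud makes rain look much more likely, and rain makes mud look more likely, so the dependence runs both ways. Only when we step in and make the ground muddy ourselves does the asymmetry show up: the chance of rain does not move.

```python
import random


def simulate(n=10_000, seed=0, force_mud=None):
    """Toy world where rain causes mud, never the reverse.

    force_mud=None -> passively observe each day.
    force_mud=True -> intervene (say, hose the ground) so mud is always present.
    All probabilities here are invented for illustration.
    """
    rng = random.Random(seed)
    days = []
    for _ in range(n):
        rain = rng.random() < 0.3      # assumed 30% chance of rain
        noise = rng.random()           # drawn every day so both runs see the same weather
        if force_mud is None:
            # mud is likely after rain, rare otherwise
            mud = noise < (0.9 if rain else 0.05)
        else:
            mud = force_mud            # mud is set by us, not by the weather
        days.append((rain, mud))
    return days


def prob(days, event, given=lambda d: True):
    """Relative frequency of `event` among the days satisfying `given`."""
    selected = [d for d in days if given(d)]
    return sum(1 for d in selected if event(d)) / len(selected)


def is_rain(day):
    return day[0]


def is_mud(day):
    return day[1]


observed = simulate()
p_rain = prob(observed, is_rain)
p_mud = prob(observed, is_mud)
p_rain_given_mud = prob(observed, is_rain, given=is_mud)
p_mud_given_rain = prob(observed, is_mud, given=is_rain)

hosed = simulate(force_mud=True)
p_rain_after_hosing = prob(hosed, is_rain)

print("P(rain)                 =", round(p_rain, 3))
print("P(rain | mud observed)  =", round(p_rain_given_mud, 3))
print("P(mud)                  =", round(p_mud, 3))
print("P(mud | rain observed)  =", round(p_mud_given_rain, 3))
print("P(rain) after making mud =", round(p_rain_after_hosing, 3))
```

The two conditional probabilities are ordinary probability statements, and each is raised by the other event, which is all that "dependent" says. The last line needs something extra, namely a description of what happens when we set mud by hand, and that is exactly the kind of sentence the lecture says plain probability language has no word for.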

…

2012-12-04 14:03:58

Source: http://gmopundit.blogspot.com/2012/12/gmo-statistics-part-33-headstrong-old.html
