
Comments On The Climate Etc Post “CMIP5 Decadal Hindcasts”

Pielke Sr Research Group: News and Commentary

Judy Curry has an excellent new paper

Citation: Kim, H.-M., P. J. Webster, and J. A. Curry (2012), Evaluation of short-term climate change prediction in multi-model CMIP5 decadal hindcasts, Geophys. Res. Lett., 39, L10701, doi:10.1029/2012GL051644. [supplemental material]

which she posted on at Climate Etc.

I made a suggestion in the comments on her weblog, which I also want to post here on my weblog. First, one of the benchmarks that dynamical model predictions of atmosphere-ocean circulation features must improve on is clearly captured in the seminal paper

Landsea, Christopher W., and John A. Knaff, 2000: How Much Skill Was There in Forecasting the Very Strong 1997–98 El Niño? Bulletin of the American Meteorological Society, 81(9) (September 2000), pp. 2107–2119.

As they wrote,

“A … simple statistical tool—the El Niño–Southern Oscillation Climatology and Persistence (ENSO–CLIPER) model—is utilized as a baseline for determination of skill in forecasting this event”

and that

“… more complex models may not be doing much more than carrying out a pattern recognition and extrapolation of their own.”

Persistence, in which the benchmark simply assumes that the initial values remain constant, is not a sufficient test. Persistence-climatology is the more appropriate evaluation benchmark: the continuation of a cycle is predicted from its past statistical behavior, given a set of initial conditions. This is what Landsea and Knaff reported on so effectively in their paper for ENSO events. Real-world observations, of course, provide the ultimate test of any model prediction, and the reanalysis products, as used by Kim et al. (2012), are the best choice.
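To make the distinction between the two benchmarks concrete, here is a minimal Python sketch. It assumes the climate index behaves like a stationary AR(1) process, so a "climatology-persistence" forecast becomes damped persistence: the initial anomaly relaxes toward the climatological mean at the rate implied by the past lag-1 autocorrelation. This is a simplified stand-in, not the ENSO–CLIPER regression model used by Landsea and Knaff, and all function names and the synthetic series are hypothetical illustrations rather than real AMO or PDO data.

```python
import random
from statistics import fmean


def persistence_forecast(initial: float) -> float:
    """Pure persistence: the initial value is assumed to remain constant at every lead."""
    return initial


def fit_climatology_persistence(history: list[float]) -> tuple[float, float]:
    """Return the climatological mean and lag-1 autocorrelation of the training record."""
    mu = fmean(history)
    anomalies = [x - mu for x in history]
    lagged = sum(a * b for a, b in zip(anomalies[:-1], anomalies[1:]))
    variance = sum(a * a for a in anomalies)
    return mu, lagged / variance


def climatology_persistence_forecast(initial: float, lead: int, mu: float, rho: float) -> float:
    """Damped persistence (AR(1) assumption): the initial anomaly decays toward
    the climatological mean by a factor rho per time step."""
    return mu + rho ** lead * (initial - mu)


def synthetic_ar1(n: int, phi: float, seed: int = 0) -> list[float]:
    """Hypothetical AR(1) index standing in for a real AMO/PDO record."""
    rng = random.Random(seed)
    series = [0.0]
    for _ in range(n - 1):
        series.append(phi * series[-1] + rng.gauss(0.0, 1.0))
    return series


def hindcast_mse(series: list[float], train_len: int, lead: int) -> tuple[float, float]:
    """Fit the baseline on the first train_len values, then score both baselines
    on hindcasts initialized from the held-out remainder of the series."""
    mu, rho = fit_climatology_persistence(series[:train_len])
    err_persist, err_clim_persist = [], []
    for t in range(train_len, len(series) - lead):
        observed = series[t + lead]
        err_persist.append((persistence_forecast(series[t]) - observed) ** 2)
        err_clim_persist.append(
            (climatology_persistence_forecast(series[t], lead, mu, rho) - observed) ** 2
        )
    return fmean(err_persist), fmean(err_clim_persist)


if __name__ == "__main__":
    index = synthetic_ar1(3000, phi=0.7, seed=1)
    mse_p, mse_cp = hindcast_mse(index, train_len=1500, lead=10)
    print(f"persistence MSE: {mse_p:.3f}  climatology-persistence MSE: {mse_cp:.3f}")
```

For a stationary AR(1) index with variance σ² and autocorrelation ρ, the expected persistence error at lead h is 2σ²(1 − ρ^h), while damped persistence gives σ²(1 − ρ^(2h)). At long leads persistence therefore approaches twice the climatological variance, which is one reason it is too easy a benchmark to beat.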

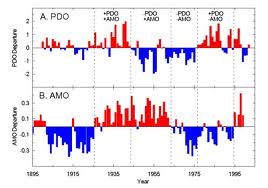

I recommend, therefore, that Kim et al. extend their evaluation to use this benchmark, examining the degree to which the CMIP5 decadal predictions improve on a statistical forecast of the Atlantic Multidecadal Oscillation (AMO) and Pacific Decadal Oscillation (PDO).
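One common way to express "improvement on a statistical forecast" is a mean-squared-error skill score, SS = 1 − MSE_model / MSE_baseline, which is positive when the dynamical forecast beats the baseline, zero when it adds no skill, and negative when it does worse. The Python sketch below assumes that metric (it is not necessarily the measure used by Kim et al.); the function names are hypothetical, and the numbers in the usage lines are toy anomalies, not CMIP5 output or reanalysis values.

```python
from statistics import fmean


def mse(forecasts: list[float], observations: list[float]) -> float:
    """Mean squared error between paired forecasts and observations."""
    if len(forecasts) != len(observations) or not forecasts:
        raise ValueError("forecasts and observations must be non-empty and of equal length")
    return fmean([(f - o) ** 2 for f, o in zip(forecasts, observations)])


def skill_vs_baseline(model: list[float], baseline: list[float], observations: list[float]) -> float:
    """MSE skill score of a dynamical forecast relative to a statistical baseline.

    > 0: the model improves on the baseline; 0: no added skill; < 0: worse than the baseline.
    """
    baseline_mse = mse(baseline, observations)
    if baseline_mse == 0.0:
        raise ValueError("baseline is perfect; skill relative to it is undefined")
    return 1.0 - mse(model, observations) / baseline_mse


if __name__ == "__main__":
    # Toy anomalies only (hypothetical), not AMO/PDO observations or CMIP5 output.
    observed = [0.2, -0.1, 0.3, 0.0]
    dynamical = [0.1, -0.1, 0.2, 0.1]
    statistical = [0.0, 0.0, 0.0, 0.0]  # e.g. a climatology forecast of zero anomaly
    print(round(skill_vs_baseline(dynamical, statistical, observed), 3))  # 0.786
```

In practice the baseline would be a persistence-climatology forecast fitted only on data available at each initialization time, so that the comparison does not give the statistical model information the dynamical model lacks.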

However, it is important to realize that the CMIP5 runs also need to be tested on their ability to predict changes in the statistical behavior of the AMO and PDO.

Dynamical models need to improve on that skill (i.e., accurately predict changes in this behavior) if they are going to add any predictive (projection) value in response to human climate forcings. The Kim et al. 2012 paper is another valuable, much-needed assessment of global model prediction skill. However, an assessment of the ability of the CMIP5 models to predict changes in climatological behavior is also needed. Of course, the observational record used must be long enough to develop these statistics adequately.

Climate Science: Roger Pielke Sr
